To determine the overall design margin, a CEI 28G VSR/100 Gigabit Ethernet design required the analysis of 5 million combinations of channel variation, transceiver process and equalization settings. Brute force simulation would have required 278 days, clearly outside the available schedule.

Instead, we used Design of Experiments (DOE) and Response Surface Modeling (RSM) to reduce the number of simulations by a factor of 19,000 and yet produce just as meaningful results.

This paper will demonstrate the application of DOE and RSM to a CEI 28G VSR design. We will show the process of creating a DOE, fitting the data to models, determining the goodness and reliability of the fit and then using the model to perform “what if” analysis, optimize design factors and quantify the impact of manufacturing variation.

Introduction

With a unit interval of only 35.7 ps, the CEI VSR 28G/100 Gigabit Ethernet link presents a challenging system level design. In this paper we describe the system design process which resulted in the industry’s first 100 Gigabit Ethernet CMOS PHY with Inphi’s GearBox and CDR chips. The jitter budget is tight, and with all the variation possible in the system there are more than 5 million system conditions at which performance must be checked and verified. Additionally, to ensure that the system is neither over-designed nor under-performing, the impact of manufacturing variation on performance and manufacturing yield needs to be estimated. Achieving and optimizing all of these objectives simultaneously is indeed a serious challenge.

The next generation core routers and data centers require more bandwidth, faster speeds and lower power. This has forced the industry to implement line speeds as fast as 28 Gbps. An example topology of such interfaces is given below in Figure 1 and shows the chip to module application, where a pluggable optical transceiver CFP2 module is connected to a line card host IC. Don’t be fooled by the seemingly clean channel: there are plenty of impairments here, and with a bit error ratio (BER) allowance of only one error in a quadrillion bits, this is a world class challenge.

Figure 1: 28G VSR host to module channel diagram.

The CMOS design allowed Inphi to reduce the power envelope to one third the power and half the area of competing SiGe and FPGA solutions.

One of the many challenges in the design process was to ensure that the transmitter and receiver were able to operate in a wide variety of channels, including some that exceeded the 28G VSR 10 dB host to module channel. Here Design of Experiments (DOE) and Response Surface Modeling (RSM) were used to determine the maximum allowable trace lengths, the best layer for PCB routing, the optimum via anti-pad size and the performance degradation due to the presence of manufacturing variance from IC process, voltage and temperature (PVT); package impedance; printed circuit board (PCB) impedance; and via stub length variations. Each of these design objectives will be addressed and we will illustrate how the DOE/RSM approach provides an informed path to meeting them.

The trouble was that, although each simulation of a system configuration took only 4.8 seconds to complete, checking 5 million conditions would require 278 days of compute time! A large compute farm would help but could easily be overwhelmed by the computation and storage of the results if additional factors were added to the exploration space or if the simulations required more time to complete. The link simulation approach used was a fast analysis approach to estimate the 1e-12 BER for the link configuration. If a bit by bit time domain analysis were used to estimate performance then the simulation time could easily approach five minutes each. With this computational load, the 5 million cases would require 47.5 years of compute time. Brute force analysis wasn’t going to be a very effective tool in this situation. We needed to find another way.
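The compute-time figures quoted above follow from simple arithmetic. The short sketch below reproduces them, assuming the simulations run serially; the 256-run count is the DOE size used later in this paper, and the function and variable names are purely illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365.25


def compute_days(n_cases, seconds_per_case):
    """Serial compute time, in days, for n_cases simulations."""
    return n_cases * seconds_per_case / SECONDS_PER_DAY


n_cases = 5_000_000

# Fast statistical link analysis: 4.8 s per case.
fast_days = compute_days(n_cases, 4.8)

# Bit-by-bit time-domain analysis: about five minutes (300 s) per case.
slow_years = compute_days(n_cases, 300.0) / DAYS_PER_YEAR

# A 256-run DOE at 4.8 s per case, and the resulting reduction factor.
doe_minutes = 256 * 4.8 / 60.0
reduction = n_cases / 256

print(f"brute force, fast solver: {fast_days:.0f} days")
print(f"brute force, time domain: {slow_years:.1f} years")
print(f"256-run DOE, fast solver: {doe_minutes:.1f} minutes")
print(f"reduction in simulations: {reduction:,.0f}x")
```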

Ideally, it would be wonderful if there was a magic equation which, given the input factors such as trace length, impedance and process corner, could reveal exactly what the resulting system performance would be. This magic equation would enable a multitude of analyses like optimization, virtual “what if” analysis and the ability to position the design to minimize the impact of manufacturing variance.

Well of course that isn’t exactly possible, but by using DOE with RSM we can approach this ideal. DOE is used to sample the factor space and RSM is utilized to create an equation (or model) which best fits the data. After verifying the correctness of the model we can utilize it to do all the things mentioned above, to optimize, perform “what if” analysis and to minimize the impact of manufacturing variation. The DOE/RSM flow is visualized in Figure 2 below which was made to emphasize the iterative nature of the analysis. Additionally, each phase of the analysis has certain assumptions which will need to be revisited and revised before a satisfactory result can be obtained.

Figure 2: Design of Experiments (DOE) and Response Surface Modeling (RSM) methodology flow.

Analysis Approach

The most important step of any type of analysis is to determine the objectives of the work. Albert Einstein allegedly stated that “If I had only one hour to save the world, I would spend fifty-five minutes thinking about the problem and only five minutes thinking about solutions”. Similarly, it is absolutely essential that the questions to be addressed and the level of accuracy of the answers be clearly defined before starting the actual work utilizing the DOE/RSM methodology. Clear analysis goals will put you in the best position to achieve your aim.

The DOE and RSM flow diagram emphasizes the iterative nature of the analysis as it allows for the refining of the assumptions at each step of the process. It can also be seen that without clear analysis goals and exit criteria, such an approach can result in loss of weekends and never ending reviews.

A statistical mindset to signal integrity, one in which decisions are made in the presence of uncertainty, is uncomfortable for some. Rather than relying on exhaustively certain analysis, the DOE/RSM analysis will provide answers couched between confidence intervals which indicate the accuracy of the results. The only difference between an exhaustively certain and statistical mindset is that in the former, any uncertainty is pretended away and in the latter, the uncertainty is quantified, scrutinized and communicated. There will always be uncertainty, whether we wish it or not and the best approach is to understand it, reduce it and embrace it. Get comfortable with uncertainty.

Please note that the JMP® statistical discovery software was utilized for the DOE creation and model fit analysis. Many of the figures in this document are from or derived from JMP® reports. The system link simulations were performed with SiSoft’s Quantum Channel Designer ® (QCD).

Assumptions

In addition to a mindset change, the DOE/RSM techniques require an in-depth knowledge of the related statistical concepts applied, which can be a challenge. The approach we will use in this paper is to first discuss some of the key concepts and the assumptions which go into them and then provide solid application examples of the methodology to the VSR 28G interface design.

In a general sense, to model an object is to utilize a simplified description of some aspect of interest which allows for an exploration of the object’s characteristics. A physical example would be the use of a model airplane in a wind tunnel to study its aerodynamic properties to aid in the design of a full size airplane. For us, the object to be modeled is a bit more ambiguous but typically is a response of the system performance such as eye height or width across the study factor ranges.

The modeling objective is to characterize the true response by taking samples of the factor space (as provided by the DOE table) and then fit a polynomial equation to the data. In this sense, the model is actually the equation which best fits the data. A visual example of such a model is shown below in Figure 3 where the eye height of a link is given versus trace length and impedance.

Figure 3: Example eye height response surface versus trace length and impedance.

Note that the model is merely an approximation of the actual response and only represents reality as far as it is accurate. Also, as can be seen from the curvature of the surface, this model is a two dimensional parabola which best fits the data. The model form and the coefficient estimates obtained from an ordinary least squares fit are given below in Equation (1):

Although this surface represents the best fit of the model to the data, it does not address the question: is this the best model to represent the ‘true response’? In this instance, adding higher polynomial terms for the length factor would likely capture better the resonant behavior of the trace. While finding the absolute best model is likely to be an elusive goal we can certainly approach it with incremental improvements to the model form. A ‘too simple’ model will result in the smoothing out of important response characteristics and a ‘too complex’ model can result in over emphasizing certain response features at the expense of other more important response characteristics.

One might think that to find the best model form one would simply apply a very large range of polynomial terms to the model fit to see which terms are significant. The difficulty with this approach is that the model form available for fitting is limited by the sampling of the response. If there are only two distinct sample levels along a factor dimension then at most a straight line can be fit to the data. If there are three levels then at most a quadratic curve can be fit, and if there are four levels then at most a 3rd order polynomial can be fit. Thus, given a fixed sampling set, there is a limit to which model forms can be applied to the fit. These limitations lead to the idea that the sampling of the factor space must also take into account the model form so that the model fit can accurately estimate the true response.
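The link between the number of sampled levels and the highest polynomial order that can be fit can be checked numerically. The sketch below is an illustration only: it uses the three line card lengths from this study (1, 3 and 6 inches) as sample levels and compares the rank of the polynomial model matrix with its number of columns. A rank lower than the column count means the coefficients cannot all be estimated.

```python
import numpy as np


def design_rank(levels, order):
    """Rank of the model matrix [1, x, x^2, ..., x^order] for the samples."""
    x = np.asarray(levels, dtype=float)
    X = np.vander(x, order + 1, increasing=True)
    return int(np.linalg.matrix_rank(X))


def max_fittable_order(levels):
    """Highest polynomial order uniquely determined by the distinct levels."""
    return len(np.unique(np.asarray(levels, dtype=float))) - 1


# Three distinct levels, each sampled twice: repeats add no new information
# about the shape of the curve.
three_levels = [1.0, 3.0, 6.0, 1.0, 3.0, 6.0]

rank_quadratic = design_rank(three_levels, 2)  # 3 columns in the model matrix
rank_cubic = design_rank(three_levels, 3)      # 4 columns in the model matrix

print("quadratic: rank", rank_quadratic, "of 3 columns")
print("cubic:     rank", rank_cubic, "of 4 columns")
print("highest fittable order:", max_fittable_order(three_levels))
```

With three levels the quadratic model matrix has full column rank, while the cubic one does not, which is the aliasing limit described above.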

Conceptually, with continuous factors one could iterate until the ideal sampling and model form is obtained to achieve a high quality model fit. In practice though, segments of the interconnect are often represented by blocks which may only have a discrete number of levels, such as would be the case from a family of connectors which are characterized by S-Parameter models. In these situations, the resolutions of the sampling are fixed and higher order system response characteristics are aliased with lower order system response characteristics, thus limiting the analysis.

The final aspect of the model assumption is the idea that a polynomial can adequately describe the response surface. There are other more mathematically sophisticated models, such as Gaussian Process modeling, which has some surprising characteristics (such as zero residual and spatially cognizant interpolation), but these will not be discussed here. The authors have found that the large majority of the signal integrity applications of the DOE/RSM methodology are adequately described by polynomial models.

The process of fitting the model to the data is typically performed by Ordinary Least Squares (OLS) regression analysis. This estimation method assumes that the input factors are uncorrelated, that the fit error variance does not vary across factors or factor levels and that the residual error is normally distributed. These assumptions can be relaxed if needed but then require more sophisticated methods such as generalized linear models (which allow for residual error distributions other than the normal distribution) and generalized least squares (which allows for correlated factors and non-uniform error variances). In the analysis here we will make an extensive investigation of the residual to ensure that the model is adequate and that it meets the OLS assumptions.

Application of methodology to 28G VSR System Analysis

The whole objective of the study is to answer design questions and quantify manufacturing variance for the 28G VSR link design. The design questions are, which layer of the PCB is preferable for the high speed routing, what is the best PCB via anti-pad size and what is the max PCB trace length that can still pass with adequate system performance? These questions should be answered in the presence of manufacturing variation, which will be quantified as well.

The objectives will be met by carefully sampling the factor space with the Design of Experiments approach, evaluating the system performance response at these DOE conditions, and fitting a response surface model to the data to produce a multi-dimensional equation which can then be used to study the total factor space. Even though this analysis description hints at a simple progression, in practice it is quite iterative as the assumptions at each stage are refined to obtain a satisfactory model fit with sufficient accuracy. It is desired that the uncertainty of any eye height and width predictions be less than +/- 30 mV and +/- 0.5 ps. Achieving the desired level of accuracy on the first pass rarely happens (if it does then it should raise your suspicions); it usually requires subtle modifications to the model assumption, factor ranges, and DOE creation.

Table 1 below lists the nine factors which define the space to be explored. The factors which directly influence design decisions are called design factors and the factors which in production are not controllable are called manufacturing factors.

| Parameter Name | Factor Type | Min | Typ | Max |
|---|---|---|---|---|
| Tx PVT Corner | Manufacturing | SS | TT | FF |
| Tx PKG | Manufacturing | 90 Ohm | 100 Ohm | 110 Ohm |
| Line Card PCB | Design | 32 mil | 36 mil | 40 mil |
| Line Card PCB | Manufacturing | 2 mil | 10 mil | 18 mil |
| Line Card PCB Routing Layer | Design | 3 | -- | 9 |
| Line Card length | Design | 1 inch | 3 inch | 6 inch |
| Line Card TL Impedance | Manufacturing | 90 Ohm | 100 Ohm | 110 Ohm |
| Rx PKG | Manufacturing | 90 Ohm | 100 Ohm | 110 Ohm |
| Rx PVT Corner | Manufacturing | SS | TT | FF |

Table 1: 28G VSR interface factor space definition.

Design the experiment

Traditionally there have been several approaches to sampling a large multi-dimensional factor space. Some of these approaches include an exhaustive sampling, where every single condition is evaluated; random sampling, where a number of conditions are chosen by chance; and one factor at a time sampling, where from a nominal condition, each factor is swept in isolation. The objective of any type of sampling is to obtain a representation of the total factor space such that by using statistics of the sample, inferences about the total factor space can be made. The exhaustive sampling approach is nice but more than likely an unrealistic approach. The random sampling approach (also called Monte-Carlo sampling) will likely be unbiased but does not guarantee coverage of the whole space and requires many sample points to ensure that all regions of the factor space are considered. Lastly, one factor at a time (sometimes called OFAT) sampling will miss out on many important factor interactions.

The design of experiments sampling approach attempts to sample the space to provide good coverage of the whole factor space while minimizing the number of runs. This is achieved by starting with a random sampling and then modifying each sample point until the coverage of the DOE set is adequate. The coverage of the sampling is quantified by the sample prediction variance which can be easily calculated with some straightforward matrix manipulations. This quantification of the prediction variance allows the uncertainty of the sampling to be quantified and thus optimized upon. This is why some call the DOE approach optimal design.
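The matrix manipulation behind the relative prediction variance can be sketched in a few lines. For a model matrix X built from the DOE runs, the relative prediction variance at a point x0 is f(x0)ᵀ (XᵀX)⁻¹ f(x0), where f expands the point into the model terms. The example below is a minimal illustration under stated assumptions, not the design used in this study: it uses two coded factors in [-1, 1], a full quadratic model and a 3x3 factorial design, and all function names are hypothetical.

```python
import itertools
import numpy as np


def quadratic_terms(points):
    """Model matrix for a two-factor quadratic model.

    Columns are 1, x1, x2, x1*x2, x1^2, x2^2.
    """
    p = np.atleast_2d(np.asarray(points, dtype=float))
    x1, x2 = p[:, 0], p[:, 1]
    return np.column_stack([np.ones(len(p)), x1, x2, x1 * x2, x1**2, x2**2])


def relative_prediction_variance(design, points):
    """f(x0)^T (X^T X)^-1 f(x0) evaluated at each requested point."""
    X = quadratic_terms(design)
    F = quadratic_terms(points)
    xtx_inv = np.linalg.inv(X.T @ X)
    # Row-wise quadratic form: sum over j, k of F[i, j] * inv[j, k] * F[i, k].
    return np.einsum("ij,jk,ik->i", F, xtx_inv, F)


# Illustrative 9-run design: every combination of the coded levels -1, 0, +1.
design = list(itertools.product([-1.0, 0.0, 1.0], repeat=2))

var_center, var_corner = relative_prediction_variance(
    design, [(0.0, 0.0), (1.0, 1.0)]
)
print(f"relative prediction variance at center: {var_center:.4f}")
print(f"relative prediction variance at corner: {var_corner:.4f}")
```

Multiplying these relative values by the fit error variance gives the variance of the predicted mean response, which is why a design can be compared and optimized before any simulation is run.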

For our VSR example, the nine factors are sampled with 256 runs and the prediction variance of the sample is minimized with the D-optimal approach. D-optimal designs sample the edges of the factor space more than the center and give more accurate model parameter estimates than other optimality criteria. Since one of the objectives is to find the worst case conditions of the factor space (these typically occur at an edge of the space), accurate estimates at the edges and corners of the factor space are important. The model assumed for the sampling was a 2nd order polynomial with 1st order interactions between all factors.

Once the simulations are run and the model fit processed, the accuracy of the model fit predictions is quantified by a confidence interval. A smaller confidence interval will give more assurance than a larger one. The confidence interval size is dependent on three things, the desired confidence level (95%, 99%, 99.9% etc.), the model fit error and the coverage of the DOE sampling. At the DOE creation step of the analysis, knowledge of the coverage of the DOE sampling allows insight into the relative confidence interval size and is embodied by the prediction variance. While visualizing a 9 dimensional space is quite a feat, we can summarize the prediction variance across the whole space with a fraction of design space plot as shown in Figure 4 below.

Figure 4: Fraction of design space plot which shows the DOE prediction variance over the fraction of the space.

This plot shows that the relative prediction variance for 50% of the factor space is less than 0.19. While the relative nature of the metric does not lend itself to absolute guidelines, it allows for the comparison of competing designs. Thus it is recommended that a few designs be generated and then compared to select the best one.

An ideal design, sometimes known as an orthogonal design, is one where the parameter estimates can be calculated independently. This is only achievable for select designs and in most situations (given the number of runs and the model form) an orthogonal design is not possible. What is possible, though, is for the design to approach the orthogonal characteristics. When a design is not orthogonal, two or more parameter effects are slightly correlated with each other and to that degree indistinguishable. The degree of correlation is shown for all 54 terms of our model in the color map on correlation plot in Figure 5 below. The names of each of the model terms are given for each column of the plot and are the same for each corresponding row. The color range in the plot goes from blue (uncorrelated) to red (totally correlated). The red diagonal of the plot shows that each model term is perfectly correlated with itself, as expected, but most importantly there are no reddish off-diagonal terms, which would be an indication of a poor design.

Figure 5: Color map on correlation plot for all of the terms in the response surface design. The correlation between two off-diagonal terms is ideally zero and is indicated by blue.
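The quantity shown in such a color map can be approximated by correlating the columns of the model matrix with each other. The sketch below is an illustration under stated assumptions: it uses the Pearson correlation as a stand-in for whatever measure the statistical package reports, a two-factor quadratic model, and two small hypothetical designs (a 3x3 factorial and nine random points) rather than the 256-run design of this study.

```python
import itertools
import numpy as np


def model_terms(points):
    """Non-intercept terms of a two-factor quadratic model."""
    p = np.asarray(points, dtype=float)
    x1, x2 = p[:, 0], p[:, 1]
    return np.column_stack([x1, x2, x1 * x2, x1**2, x2**2])


def max_offdiag_correlation(points):
    """Largest absolute correlation between two different model terms."""
    corr = np.corrcoef(model_terms(points), rowvar=False)
    off_diag = corr - np.diag(np.diag(corr))  # zero out the diagonal
    return float(np.max(np.abs(off_diag)))


# Hypothetical designs in coded units: a 3x3 factorial and 9 random points.
factorial = list(itertools.product([-1.0, 0.0, 1.0], repeat=2))
rng = np.random.default_rng(0)
random_design = rng.uniform(-1.0, 1.0, size=(9, 2))

worst_factorial = max_offdiag_correlation(factorial)
worst_random = max_offdiag_correlation(random_design)

print(f"worst off-diagonal correlation, factorial: {worst_factorial:.3f}")
print(f"worst off-diagonal correlation, random:    {worst_random:.3f}")
```

The factorial design shows no correlation between terms, while the small random design does, which is the kind of difference the color map makes visible at a glance.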

In order to put the upcoming model fit in the best possible position, the design of experiments sampling has found a sample set which adequately covers the factor space and allows for near independent estimation of the parameter effects.

Evaluate System Response

The DOE sampling conditions are brought into the EDA link simulation environment for the 28G VSR topology, simulated and link performance metrics calculated. The metrics which will be considered here are eye height and eye width at a BER of 1e-12. It is essential to check the simulation result waveforms for consistency and accuracy. It is recommended to check the outliers in the performance to ensure that they embody realistic results. It will be assumed during the model fit that each condition represents the actual response thus any discrepancies will propagate errors into the model fit and will result in poor or even wrong analysis conclusions. Below in Figure 6 is an example of how to visualize the results in SiSoft’s Quantum Channel Designer ® (QCD).

Figure 6: The simulation results must be carefully evaluated to ensure that all results are reasonable before proceeding with the model fit.

Response Surface Model Fit

All of the precautions taken up to this point have been done to obtain a good model fit. Once the fit is complete and its quality measured it will be determined whether those precautions were sufficient or if more iterations and refinements are necessary. The model form which we will utilize, called a Response Surface Model, is a multi-dimensional polynomial with interaction terms as shown in Equation (2) below.

$$ y = \beta_0 + \sum_{i=1}^{n} \beta_i x_i + \sum_{i=1}^{n} \beta_{ii} x_i^2 + \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \beta_{ij} x_i x_j \qquad (2) $$

Here $y$ is the measured response (such as eye height), $x_i$ is one of the n=9 factors and the $\beta$’s are the unknown model coefficients which will be estimated by the least squares method.

The difference between the simulated eye height and the eye height as predicted by the response equation is called the error residual. By examining the residual we can obtain several measures of model quality and validate assumptions.

Goodness of fit

The simplest fit metric is the coefficient of multiple determination, which everyone just calls “R-squared” for short and writes as R². This metric ranges from 0 for a poor fit to 1 for a good fit. Conceptually, 100*R² can be thought of as the percentage of the variation in the data that can be explained by the model. One interesting fact is that R² never decreases when additional model terms are added, whether or not these new terms are actually significant. A modified metric, called adjusted R², takes into account the number of terms used in the model and penalizes any unnecessary terms. Thus a large difference between R² and adjusted R² is an indication that there are unnecessary terms in the model.

The error standard deviation of the fit can be estimated by taking the residual for each point in the data set, squaring it, finding the mean and then taking the square root. This RMSE metric can also be used as a quick estimate of the prediction confidence interval. For a 95% confidence interval estimate, simply multiply RMSE by 2. If +/- this value is larger than the needed accuracy then it will be necessary to go back and revisit earlier assumptions such as the model form used in the DOE creation and the factor space definition.
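The goodness-of-fit metrics described above can be computed directly from the simulated and predicted responses. The following is a minimal sketch: the RMSE follows the description in the text (mean of the squared residuals), whereas statistical packages commonly divide by the residual degrees of freedom instead, so reported values may differ slightly. The function name and the four data points are hypothetical.

```python
import numpy as np


def fit_metrics(y, y_pred, n_terms):
    """R^2, adjusted R^2, RMSE and a rough 95% half-width (2 * RMSE).

    n_terms is the number of fitted coefficients, including the intercept.
    """
    y = np.asarray(y, dtype=float)
    resid = y - np.asarray(y_pred, dtype=float)
    n = y.size
    sse = float(np.sum(resid**2))            # residual sum of squares
    sst = float(np.sum((y - y.mean())**2))   # total sum of squares
    r2 = 1.0 - sse / sst
    r2_adj = 1.0 - (1.0 - r2) * (n - 1) / (n - n_terms)
    rmse = float(np.sqrt(sse / n))           # as described in the text
    return {"r2": r2, "r2_adj": r2_adj, "rmse": rmse,
            "ci95_half_width": 2.0 * rmse}


# Hypothetical data: four responses and the predictions of a 2-term model.
metrics = fit_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8], n_terms=2)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```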

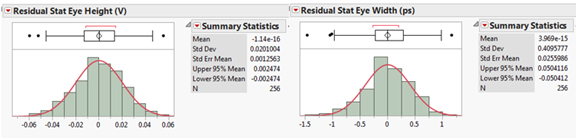

Figure 7: Goodness of fit summaries for eye height (left) and eye width (right).

Figure 7 above shows the JMP statistical software fit summary for the eye height and eye width fits. The R² and adjusted R² values are in the high 90s when expressed as percentages, and the RMSE is 22 mV for eye height and 0.45 ps for eye width.

Lastly the fit error residual needs to be examined itself to ensure that it is normally distributed and that it does not contain any “structure”. Figure 8 below shows the residual distribution of the eye height and width which can be seen as roughly normally distributed.

Figure 8: Fit error residuals for eye height (left) and eye width (right). These show that the residuals are roughly normally distributed as required.

Plots of the residual versus the response or other important factors are the best way to search for “structure” in the residual. Structure, i.e. some systematic relation between the residual and some explanatory variable, is evidence of a model bias and can provide clues as to what new terms should be included in the model fit. Often such additional model terms are not able to be immediately utilized because of insufficient sampling of the DOE and require a reformulation of the DOE creation so that the additional model terms can be added without aliasing other important effects. Below in Figure 9 is the plot of the residual versus the response. The residual should be normally distributed no matter how it is viewed but it can be seen from the figure that the lowest predicted eye height cases have a positive residual as circled in the figure. Further investigation showed that an additional model term of PCB_LEN*PCB_Z*RX_CORNER improved the fit. Although there may be more such model terms which may improve the fit, the accuracy was sufficient for the needs of the study and the model fit was deemed good enough.

Figure 9: Residual versus the predicted eye height (left) and predicted eye width (right). Systematic structure in the residual is an indication of model bias. Note how the lowest eye height performance cases all have positive residuals; this observation led to the inclusion of an additional model term which improved the fit.
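How structure in the residual points to a missing model term can be shown with a small synthetic case. The sketch below is illustrative only and does not use the study data: the response is generated from an invented equation containing an interaction term, a main-effects-only model is fit first, and the residual is then compared with the omitted term.

```python
import itertools
import numpy as np

# Synthetic 3x3 factorial in coded units; the "true" response is invented.
grid = np.array(list(itertools.product([-1.0, 0.0, 1.0], repeat=2)))
x1, x2 = grid[:, 0], grid[:, 1]
y = 1.0 + 2.0 * x1 + 3.0 * x2 + 0.5 * x1 * x2


def fit_residual(X, y):
    """Ordinary least squares fit; returns simulated minus predicted."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ coef


# Model 1: main effects only, so the interaction is left in the residual.
X_main = np.column_stack([np.ones_like(x1), x1, x2])
resid_main = fit_residual(X_main, y)
structure = float(np.corrcoef(resid_main, x1 * x2)[0, 1])

# Model 2: add the interaction term suggested by the residual structure.
X_full = np.column_stack([X_main, x1 * x2])
resid_full = fit_residual(X_full, y)

rmse_main = float(np.sqrt(np.mean(resid_main**2)))
rmse_full = float(np.sqrt(np.mean(resid_full**2)))

print(f"correlation of residual with x1*x2: {structure:.3f}")
print(f"RMSE without interaction: {rmse_main:.4f}")
print(f"RMSE with interaction:    {rmse_full:.2e}")
```

In a real study the residual rarely lines up this cleanly with a single candidate term, so several plots of the residual against factors and factor products are usually inspected.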

While these are very good model fit results, it is interesting to think about the source of the remaining uncertainty. The residual error can only come from two sources, random errors and lack of fit. For deterministic signal integrity simulations there is no random noise so the residual is due solely to lack of fit. In practice, a perfect model fit is not achieved because the underlying phenomenon is not a perfect polynomial and also because the true underlying phenomenon factors are garbled and only imperfectly represented by the study factors.

Explore and Optimize the 28G VSR System

Once everything has been done to ensure a proper fit, we can explore the factor space as represented by the model with confidence that the uncertainty is roughly understood. In this application, visualizing a 10 dimensional space is a daunting task; fortunately there are some tools which facilitate this type of analysis. A plot, called the prediction profiler, shows what the response would be across each factor if all of the other factors are held constant. This type of plot is most useful when used interactively, but much can be gleaned from the static views used below as well. As an example, consider the prediction profiler plot for the two-factor RSM fit as shown in Figure 10.

Figure 10: Example prediction profiler plot of the predicted eye height response. It shows the response versus each of the factors given all of the other factors are held constant.

This prediction profiler plot shows the eye height response across the explanatory factors, trace length (PCB_LEN) and trace impedance (PCB_Z). Since the slope of the trace length factor is greatest we can state that this is the most influential factor in this region of the factor space. If there are any interactions between the factors then the effective slope of the line could change in other areas of the factor space. Additionally, this plot indicates that when PCB_LEN=3.5 and PCB_Z = 100 then the predicted eye height is 0.538 V with a 95% confidence interval of [0.514, 0.562] V, which is equivalent to stating that the predicted eye height is 0.538 +/- 0.0234 V for a 95% confidence interval. The confidence intervals are represented in the prediction profiler plot by the blue dashed lines surrounding the solid black predicted response.

A confidence interval (sometimes abbreviated as CI) can be thought of as conveying the following information:

- a CI provides a range of plausible values for the true response with values outside the range as relatively implausible, or

- a CI gives the precision of the estimation where the upper and lower bounds provide the likely maximum error of estimation, although there is a possibility of larger errors.

A confidence level of 95% roughly covers two standard deviations from the predicted value and a confidence level of 99.7% roughly covers three standard deviations from the predicted value. The prediction profiler plot for the middle of the example factor space is given in Figure 11.
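The relation between a confidence level and a number of standard deviations can be checked with the normal distribution, assuming normally distributed errors as in the OLS discussion earlier. This is a minimal sketch using only the Python standard library; the function name is illustrative.

```python
import math


def normal_coverage(k_sigma):
    """Probability that a normal variable lies within +/- k_sigma of its mean."""
    return math.erf(k_sigma / math.sqrt(2.0))


coverage_2 = normal_coverage(2.0)  # about 0.9545
coverage_3 = normal_coverage(3.0)  # about 0.9973

print(f"+/- 2 standard deviations: {100 * coverage_2:.2f}%")
print(f"+/- 3 standard deviations: {100 * coverage_3:.2f}%")
```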

Figure 11: Eye height (top row) and eye width (bottom row) Prediction Profiler for the middle of the factor space. Important factors can be identified by the slope of the curves.

As was noted before, the trace length is the most influential factor in this region of the space. The two rows of plots are for the eye height and eye width, respectively. If it was desired to understand the impact of a factor in this region of the space, this plot easily provides this information.

Because the fitted response surface is represented by a well-defined function, the space can be searched for the worst case conditions as shown in the prediction profiler plot in Figure 12.

Figure 12: Prediction profile plot for eye height (top row) and eye width (bottom row) at the predicted worst case condition.

It will be noted that while the trace length is still the most influential factor in this region of the space, the trace impedance and receiver PVT corner ($RX_CORNER) have also become somewhat influential as compared to their influence at the middle of the factor space.

Determining Routing Layer for High Speed Signals

The design optimization strategy used here is to first identify the worst case manufacturing condition and then put the design factors in their best case conditions to minimize the impact of the worst case performance.

To quantify the impact of the PCB routing layer at the worst case corner, the predicted response is calculated for PCB_LAYER=3 and PCB_LAYER=9 and compared. As shown in Figure 13 below (note the vertical axis has been scaled to provide a better view) the eye height and width difference between the two PCB layers is only 0.012 mV and 0.35 ps. Also note that the 95% confidence interval for eye height is +/-24 mV and for eye width, +/- 0.5 ps. When the predicted difference is less than the confidence interval then it can be stated that the model is unable to confidently identify the effect as significant and provides no actionable information. This could lead to the following conclusions:

- the PCB routing layer is a weak predictor of the system performance and layers 3 and 9 are equivalent, or

- PCB routing layer 9 has a slight but statistically insignificant advantage over layer 3.

If either of these conclusions is insufficient then additional information on the impact of the two layers will be required to make a definitive decision. A more focused DOE/RSM around this factor could be defined where some of the other insignificant factors are removed from the study to improve the accuracy of the analysis. Additionally, other influences on the routing layer decision, such as cost in PCB space or cost in money, should be considered when making any decisions.

For the VSR study, it will be concluded that PCB routing layer 9 has a slight advantage (although statistically insignificant) over layer 3.

Figure 13: Example of how to evaluate "what if" scenarios across the PCB layer factor. The plot on the left shows the predicted responses for when the PCB routing layer is on layer 3 and the plot on the right shows the predicted responses for when the PCB routing layer is layer 9.

Determining the best Via Anti-pad size

A similar approach will be taken to understand the impact of the PCB via anti-pad size. The link performance difference between anti-pad diameters of 32 and 40 mil is 45 mV for eye height and 0.47 ps for eye width, where the eye height confidence interval is +/- 25 mV and the eye width confidence interval is +/- 0.5 ps for a 95% confidence level. This can be seen visually in the prediction profiler plots in Figure 14: the dashed red horizontal lines for the eye height plots are not contained within the dashed blue confidence interval lines, whereas for eye width the dashed red lines are contained within the dashed blue confidence interval lines. Therefore we can conclude that an anti-pad size of 32 mils is a better solution for eye height; it may also be better for eye width, but that difference is statistically insignificant. Additional constraints, such as increased manufacturing problems with fabricating a given anti-pad diameter, should be carefully weighed against the performance benefits before making a final decision.
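The decision rule used in the routing layer and anti-pad comparisons, where a predicted difference is treated as actionable only when it exceeds the confidence interval half-width, can be written down compactly. The sketch below applies that rule of thumb to the eye width difference quoted for the routing layer and to both anti-pad differences; the function name is hypothetical, and a more rigorous test would use the confidence interval of the difference itself.

```python
def is_significant(difference, ci_half_width):
    """Rule of thumb from the text: the difference must exceed the CI half-width."""
    return abs(difference) > ci_half_width


# (description, predicted difference, 95% CI half-width), values from the text.
comparisons = [
    ("routing layer 3 vs 9, eye width [ps]", 0.35, 0.5),
    ("anti-pad 32 vs 40 mil, eye height [mV]", 45.0, 25.0),
    ("anti-pad 32 vs 40 mil, eye width [ps]", 0.47, 0.5),
]

results = {name: is_significant(diff, ci) for name, diff, ci in comparisons}
for name, significant in results.items():
    verdict = "significant" if significant else "not significant"
    print(f"{name}: {verdict}")
```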

For this study, an anti-pad size of 32 mils will be used in future analysis.
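The comparison above reduces to checking whether each predicted difference exceeds the half-width of its confidence interval. The following is a minimal sketch of that check using the numbers quoted in the text; the function name and data layout are illustrative assumptions, not part of the original tool flow.

```python
def exceeds_confidence_interval(difference, ci_half_width):
    """Return True when the predicted difference is larger than the CI half-width."""
    return abs(difference) > ci_half_width


# Values quoted in the text for 32 mil vs. 40 mil anti-pads (95% confidence level).
eye_height = {"difference": 45.0, "ci_half_width": 25.0}  # mV
eye_width = {"difference": 0.47, "ci_half_width": 0.5}    # ps

height_significant = exceeds_confidence_interval(**eye_height)
width_significant = exceeds_confidence_interval(**eye_width)

print(f"eye height difference significant: {height_significant}")
print(f"eye width difference significant:  {width_significant}")
```

With these inputs the eye height difference is flagged as significant and the eye width difference is not, which matches the reading of Figure 14.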

Figure 14: "What If" analysis across the via anti-pad factor showing the performance difference between different via anti-pad sizes.

One important point to clarify is that while the DOE/RSM methodology can provide a quantification of the impact of a given factor, it cannot give any indication as to why. The reasons why a factor is impactful in a given situation must come from subject matter expertise and engineering judgment. If no satisfactory physical explanation is forthcoming, then a statistical indication of importance may be the impetus for further analysis and it can be useful to make hypotheses about competing physical explanations of the data.

Manufacturing Variation

One approach to quantify the manufacturing variation is to assign probability distribution functions (PDFs) to each of the factors and then randomly generate millions of cases. Utilizing the response surface equation, the system performance can be quantified for each of the random cases and used to give an indication of the probability of yielding a certain system performance level. Much care must be taken to obtain accurate PDFs as the weights of the tails can make a large difference in such analysis. Figure 15 shows the prediction profiler plot and the PDFs assigned to each of the manufacturing factors.

Figure 15: Prediction profiler plot with distributions assigned to each of the manufacturing factors. Randomly sampling the factor space according to these distributions will give manufacturing Yield information.
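The sampling procedure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the factor names, their distributions, and every response surface coefficient below are hypothetical placeholders rather than the fitted VSR model, and the case count is reduced so the example runs quickly in plain Python.

```python
import random
import statistics


def eye_height_mv(z0, loss, xtalk):
    """Hypothetical response surface for eye height (mV) in coded units (-1..+1).

    The coefficients are placeholders chosen for illustration only.
    """
    return (260.0 - 12.0 * z0 - 20.0 * loss - 8.0 * xtalk
            - 6.0 * z0 * loss - 5.0 * loss ** 2)


def sample_factors(rng):
    """Draw one random case from hypothetical factor PDFs."""
    z0 = max(-1.0, min(1.0, rng.gauss(0.0, 0.33)))  # normal, clipped to the coded range
    loss = rng.triangular(-1.0, 1.0, 0.2)           # skewed triangular (low, high, mode)
    xtalk = rng.uniform(-1.0, 1.0)                  # uniform
    return z0, loss, xtalk


def monte_carlo(n_cases, seed=1):
    """Evaluate the response surface for n_cases randomly generated cases."""
    rng = random.Random(seed)
    return [eye_height_mv(*sample_factors(rng)) for _ in range(n_cases)]


samples = monte_carlo(50_000)
mean_mv = statistics.fmean(samples)
std_mv = statistics.stdev(samples)
print(f"eye height: mean = {mean_mv:.1f} mV, std = {std_mv:.1f} mV")
```

Because each case is a polynomial evaluation rather than a channel simulation, millions of cases are practical; the shape assumed for each PDF, especially its tails, drives the result.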

We wanted to understand the manufacturing variation as a function of the line card trace length. Therefore, for each of the line card lengths of 1, 2, 3, 4, 5 and 6 inches, one million cases were randomly generated and the eye height and eye width performance was calculated using the response surface equation. The average and standard deviation of the one million cases for each length are given below in Figure 16. This analysis clearly shows that, for eye height, the impact of manufacturing variation increases with longer line card trace lengths. A possible explanation is not that more manufacturing variation occurs at a trace length of 6 inches, but that the system is much more susceptible to those variations at that length. It should also be noted that the eye width variation changes very little with increased trace length.

Figure 16: Manufacturing Yield predictions versus maximum allowed trace length. The vertical bars indicate the standard deviation of each yield analysis and show increased eye height variability with increased trace length.
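One way to see how the same manufacturing spread can produce more response variation at longer trace lengths is to write out a simplified response surface. The form below is an assumption made for illustration: it keeps only main effects and interactions with trace length $L$, treats the manufacturing factors $x_i$ as independent with variances $\sigma_i^2$, and uses generic symbols that are not the fitted coefficients of the VSR model.

```latex
% Simplified response surface (assumed form): main effects plus
% interactions between each manufacturing factor x_i and trace length L.
y = \beta_0 + \beta_L L + \sum_i \beta_i x_i + \sum_i \beta_{iL}\, x_i L

% For a fixed trace length L and independent factors x_i with variance \sigma_i^2:
\operatorname{Var}(y \mid L) = \sum_i \left( \beta_i + \beta_{iL} L \right)^2 \sigma_i^2
```

Under this assumed form the factor variances $\sigma_i^2$ do not depend on $L$, but the sensitivity $\beta_i + \beta_{iL} L$ does, so the standard deviation of the response can grow with trace length whenever the interaction terms reinforce the main effects.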

Defects per Million Analysis

The final piece of analysis is an estimation of the defects per million. Provided that the factor PDFs and response surface equations are accurate, the manufacturing yield of the system can be calculated. The pass/fail criteria for this analysis depend on which link uncertainties are included in the simulation and which are budgeted. For this example, the spec limits for a passing system are an eye height of 200 mV and an eye width of 19 ps. For a system with line card lengths of 6 inches, the yield distribution plots for eye height and width are given in Figure 17 below.

Figure 17: Defects Per Million (DPM) analysis showing a predicted 225 and 170 DPM according to the eye height and eye width requirement, respectively.

Here the lower spec limit (LSL) is plotted on each distribution, together with the percentage of the distribution that falls below this limit and the corresponding parts per million (PPM) values of 224 and 170 defects per million.
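The defects-per-million figure is simply the fraction of the response distribution below the LSL, scaled to one million. The sketch below shows two ways to estimate it: counting Monte Carlo cases directly, and using a normal approximation. The mean, standard deviation and toy cases are hypothetical; only the 200 mV eye height limit comes from the text, and the normality of the response is an assumption.

```python
from statistics import NormalDist


def dpm_from_samples(samples, lower_spec_limit):
    """Empirical DPM: fraction of cases below the LSL, scaled to one million."""
    failures = sum(1 for value in samples if value < lower_spec_limit)
    return 1e6 * failures / len(samples)


def dpm_from_normal(mean, std, lower_spec_limit):
    """DPM if the response were normally distributed (an assumption)."""
    return 1e6 * NormalDist(mean, std).cdf(lower_spec_limit)


EYE_HEIGHT_LSL_MV = 200.0  # spec limit quoted in the text

# Hypothetical distribution parameters, chosen only to illustrate the calculation.
dpm_normal = dpm_from_normal(mean=235.0, std=10.0,
                             lower_spec_limit=EYE_HEIGHT_LSL_MV)

# Tiny hand-checkable example: 2 of 8 cases fall below the limit.
toy_cases = [195.0, 205.0, 210.0, 199.0, 220.0, 230.0, 215.0, 240.0]
dpm_toy = dpm_from_samples(toy_cases, EYE_HEIGHT_LSL_MV)

print(f"normal approximation: {dpm_normal:.0f} DPM")
print(f"toy empirical count:  {dpm_toy:.0f} DPM")
```

Empirical counting needs many cases to resolve a few hundred defects per million, which is one reason a million cases per condition is a sensible sample size; the normal approximation is cheaper but is only as good as the fit in the lower tail.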

Conclusion

We have shown the methodology that assisted in the first CMOS 28G VSR / 100G Ethernet PHY design. It addressed three interface design questions: which PCB layer to route on, what size of via anti-pad to use, and what maximum trace length to allow. The worst-case conditions were identified and the impact of manufacturing variation on performance was quantified, all with the DOE/RSM methodology. Instead of simulating millions of conditions requiring months of compute time, the DOE intelligently sampled the factor space with only 256 runs. A least squares fit found the response surface that best fit the data, and once the model was validated it was used to predict system performance throughout the factor space. It must be emphasized that several iterations of the methodology were required as the model assumptions changed. Overall, the DOE/RSM methodology has been shown to be a powerful approach to comprehending and optimizing a dizzyingly large factor space, and it contributed to Inphi’s success with the world’s first production-ready 100G CMOS PHY/SerDes Gearbox.

References

Hall, S. & Heck H. (2009). Advanced Signal Integrity for High-Speed Digital Designs.

Montgomery, D. (2009). Design and Analysis of Experiments, 7th Edition.

Goos, P. & Jones, B. (2011). Optimal Design of Experiments: A Case Study Approach.

www.JMP.com

Biographies

Richard Allred is a Senior Member of Technical Staff at SiSoft. Previously, Richard worked at Inphi where he was responsible for Inphi’s 100G Ethernet PHY (28G per lane) front plane interface. In the course of that work, Richard contributed to IEEE 802.3 and OIF-28G-VSR standards discussions on next generation Ethernet. Before that he worked at Intel, contributing to signal integrity methodology and tool development for GDDR5/DDR3. He used Design of Experiments and Response Surface Modeling in the course of this work to predict link performance across high volume manufacturing. Richard received his MSEE from University of Utah, and has 4 publications.

Barry Katz, President and CTO for SiSoft, founded SiSoft in 1995. As CTO, Barry is responsible for leading the definition and development of SiSoft’s products. He has devoted much of his efforts at SiSoft to delivering a comprehensive design methodology, software tools, and expert consulting to solve the problems faced by designers of leading edge high-speed systems. He was the founding chairman of the IBIS Quality committee. Barry received an MSEE degree from Carnegie Mellon and a BSEE degree from the University of Florida.

Ishwar Hosagrahar is a Senior Staff Engineer at Inphi, with over 17 years of industry experience working with Networking & Communication Circuits and Systems. Prior to Inphi, he worked at Texas Instruments on products ranging from 10/100 Mbps Ethernet PHYs to 15+ Gbps SerDes transceivers. Before that, he worked at ArcusTech (later acquired by Cypress Semiconductor) designing Ethernet and Telecom switch ICs. He holds a Master's degree in VLSI/Circuit design from University of Texas. In his spare time, he enjoys flying planes (and anything aviation-related), plays various musical instruments (albeit somewhat poorly) and dabbles in hi-fi systems.

Chao Xu is a Sr. Director of Platform Engineering at Inphi. He specializes in integrated circuit design and architecture related to Computing Architecture, Memory Architecture, High Speed Digital Communication and Digital Signal Processing in Server and Data Communication areas. He has extensive experience in system signal integrity, including high speed channel analysis and implementation, and in high speed mixed signal integrated circuit designs such as SerDes, PLLs, RF transceivers and optical transceivers. Chao received his Ph.D. degree in Electrical Engineering from University of Pennsylvania. He has more than 10 issued US patents.

Wiley Gillmor is a Principal Engineer engaged in software development at Signal Integrity Software, Inc. His career in EDA has spanned over 35 years, focusing on tools for physical design and engineering. Prior to that he had a brief academic career teaching Mathematical Logic and Computer Science.
