In communication systems, information theory, and coding theory, forward error correction (FEC) is a technique used for controlling errors in data transmission over unreliable or noisy communication channels. FEC owes its beginnings to the pioneering work of Claude Shannon in 1948 on reliable communication over noisy transmission channels [1]. Shannon’s central theme was that if the signaling rate of the system is less than the channel capacity, reliable communication can be achieved if one chooses proper encoding and decoding techniques.

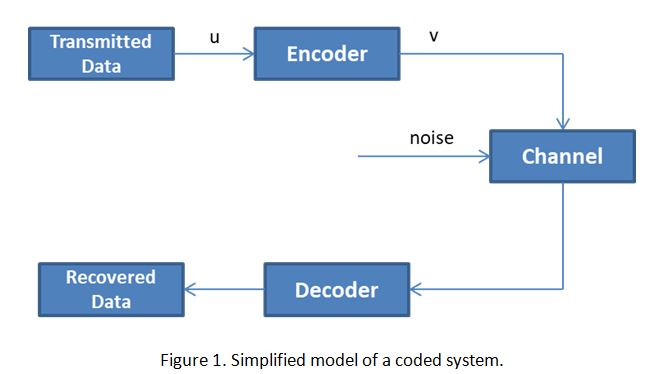

Figure 1 shows a simplified model of a coded system. The raw transmission data is represented as a message sequence u. The FEC encoder transforms the message u into a codeword v by adding redundant data, prior to entering the unreliable or noisy channel. The added redundancy allows the receiver decoder to detect a limited number of errors that may occur in the message, and often to correct these errors without re-transmission, with the goal that the original message sequence u is recovered successfully at the output of the decoder.

Types of FEC codes

Two structurally different types of codes are in common use today: block codes and convolutional codes. The encoder for a block code divides the information sequence u into message blocks of k information bits (symbols) each and transforms each message u independently into a codeword, n-bit (symbols) v. The ratio R = k/n is called the code rate. The redundant bits (symbols), n-k, provide the code with the capability of combating the channel noise.

An important parameter of a block code is the minimum distance, dmin, this is the distance between two closest codewords, which represents the minimum number of data changes required to alter one valid codeword into another. This parameter determines the error detecting and correcting capabilities of a code. Normally a FEC code is able to detect dmin-1 errors per codeword and correct up to (dmin-1)/2 errors per codeword. For example, Reed Solomon code, RS (544, 514, t=15, m=10), is a block code with 514 information symbols and 30 redundant symbols. Each symbol has 10 bits. Its minimum distance is dmin=31 such that it can correct up to (dmin-1)/2=15 symbol errors per codeword.

The encoder for a convolutional code also accepts k-bit blocks of the information sequence u and produces an encoded sequence v of n-symbol blocks. However, each encoded block depends not only on the corresponding k-bit message block at the same time unit but also on m previous message blocks. Besides redundant bits, n-k, more redundancy is added by increasing the memory order m of the code to achieve reliable transmission over a noisy channel.

Based on Shannon theory [1], the longer the codeword is the more powerful error correcting capability it provides. However, the coding complexity increases with codeword length too. To achieve better trade-off between complexity and coding performance, there are a few techniques for constructing long powerful codes from short components codes, such as product codes, concatenated codes and interleaved codes.

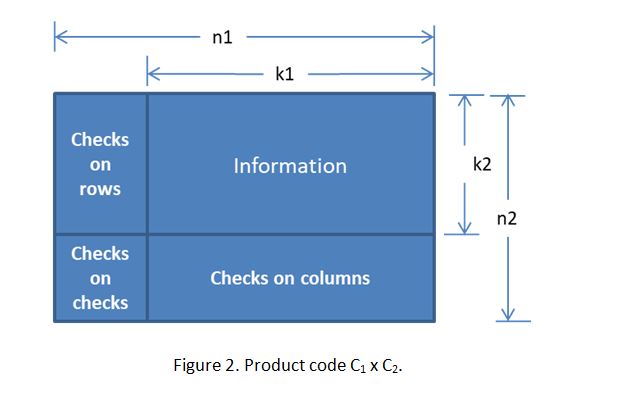

Figure 2 shows a two-dimensional product code formed by two codes C1 (n1, k1) and C2 (n2, k2) with minimum distance dmin1 and dmin2, respectively. Each row of the product code C1 x C2 is a codeword in C1 and each column is a codeword in C2. The product code is capable of correcting any combination of (dmin1dmin2-1)/2 errors.

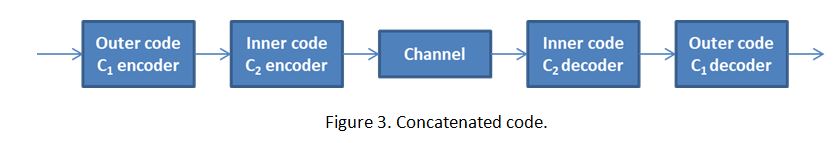

Figure 3 shows a one-level concatenated code with an outer code C1 (n1, k1) with minimum distance dmin1 and an inner code C2 (n2, k2) with minimum distance dmin2. The minimum distance of their concatenation is at least dmin1dmin2.

Figure 4 shows transmission of an interleaved code. Given an (n,k) block code C, it is possible to construct a (λn, λk) block code by interleaving, that is simply by arranging λ codewords in C into λ rows of a rectangular array and then transmitting the array column by column. Even though the minimum distance of the interleaved code is still dmin as individual code C, it can break the long burst errors into λ different codewords.

More advanced FEC codes, such as turbo codes [2] and low-density parity-check (LDPC) codes [3], have been invented by academics and adopted by industry in the last several decades to approach Shannon limit (or channel capacity) [1]. However, their excellent performance gains are normally paid by large encoding/decoding complexity and latency.

There are four critical factors to consider when selecting a proper FEC code and coding scheme for a particular communication system. To maintain high throughput or avoid significantly increasing link rate the code rate needs to be high. To compensate channel loss or relax signal to noise ratio (SNR) or bit error rate (BER) requirements at decision slicers in the receiver a large coding gain is desirable. However the drawbacks of the FEC are the coding latency and coding complexity that will increase transmission time and system power/cost.

FEC Applications to Serial Link Systems

The landscape of FEC technology for wire line communication systems is shown in Figure 5 (from [4]) and includes both electrical and optical links. For electrical links, the industry recently incorporated signaling format updates from two-level signaling format (NRZ) to four-level signaling format (PAM4) during the transition from 25 Gb/s to 50 Gb/s link data rates.

One of the major design challenges of PAM4 SerDes is the detection penalty of PAM4 over NRZ, about 9.54 dB or even larger if considering horizontal margin degradation due to multi-level signal crossings. Therefore, FEC becomes an important part of the PAM4 system solution to offset this detection penalty. RS (544, 514, 15) FEC, also known as KP4 FEC, has been widely adopted in PAM-4 links. It provides 200/400G Ethernet systems with up to 7dB coding gain, while adding a latency penalty of hundreds of nano-seconds (ns) as a cost. High-gain FEC codes such as low density parity check (LDPC) codes and Turbo product codes (TPC) are normally considered for long-distance optical transmission systems with the cost of larger coding latency and complexity. For low latency applications, short simple block codes with a moderate coding gain and complexity could be used.

References

[1]. C. E. Shannon, “A Mathematical Theory of Communication,” Bell Syst. Tech. J., pp. 379-423 (Part 1); pp. 623-56 (Part2), July 1948.

[2]. C. Berrou, A. Glavieux, and P. Thitimajshima, “Near Shannon Limit Error-Correcting Coding and Decoding: Turbo Codes,” Proc. IEEE Intl. Conf. Commun. (ICC 93), pp. 1064-70, Geneva, Switzerland, May 1993.

[3] R. G. Gallager, “Low Density Parity Check Codes,” IRE Trans. Inform. Theory, IT-8:21-28, January 1962.

[4]Y. Lu, “High Gain Low Complexity Low Latency FEC Codes for Ethernet and Backplane Applications”, DesignCon 2018

[5] S. Lin and D. Costello, Error Control Coding, Prentice Hall, February 2002.